This post is the second in a series of deeper dive articles on the four DORA metrics. The first metric we looked at was Deployment Frequency, which is about how often code can get released to end users. In this second article, we’ll look at Change Lead Time, arguably the metric most familiar to developers.

What is Change Lead Time?

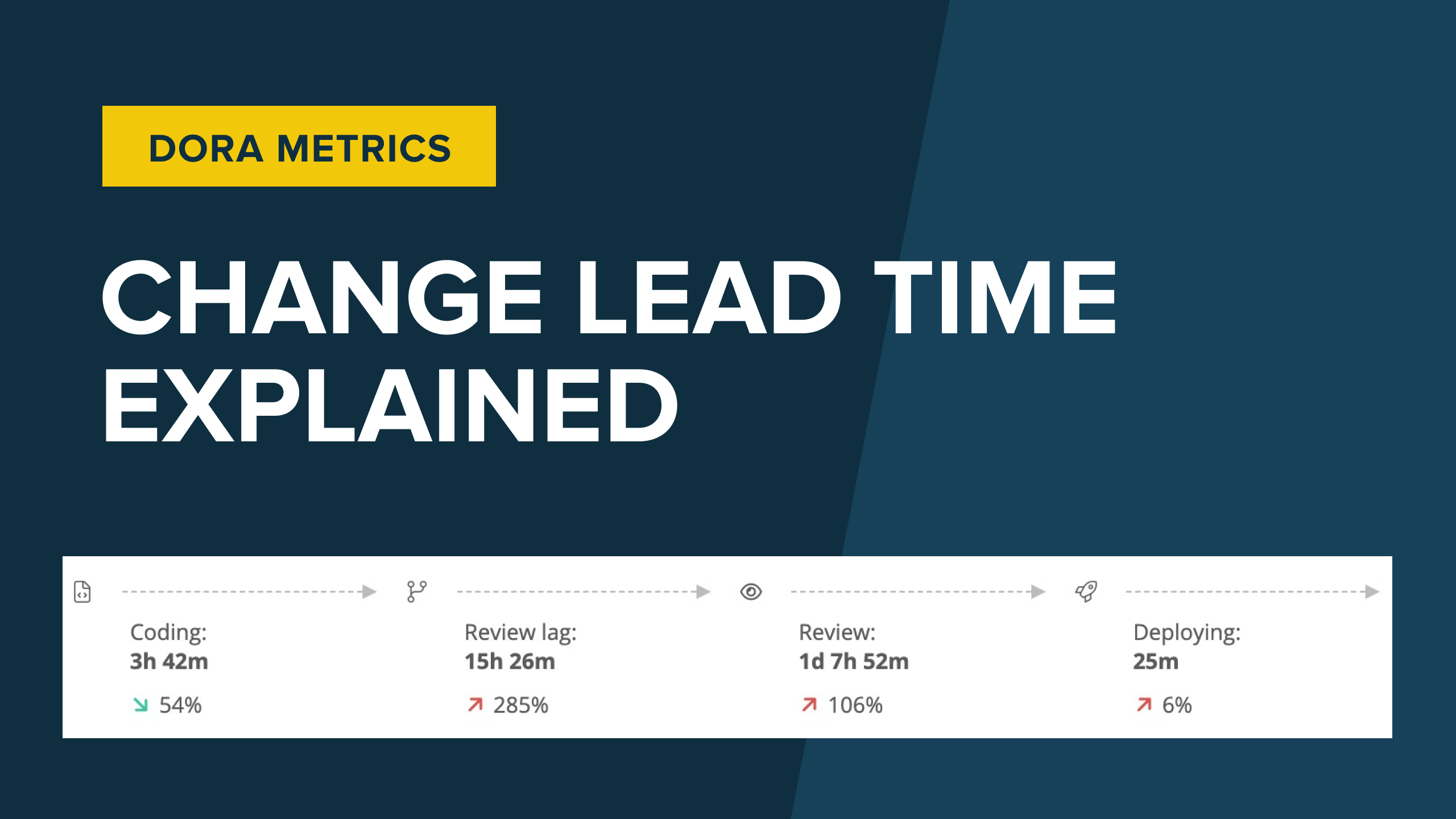

Similar to Deployment Frequency, Change Lead Time measures velocity. However, Change Lead Time tracks the time from when a developer starts writing code for a feature or a change to when that change is released to end users. Change Lead Time can be broken down into a few stages:

- Coding

- Code review

- Deployment

By measuring Change Lead Time spent overall and in individual stages, teams can get better insights into how their time is spent. The insights create opportunities for teams to improve and speed up their processes, ensuring that users get access to features faster.

Mature teams have a Change Lead Time that is measured in days. In fact, per DORA, elite teams have Change Lead Time of less than one day. In the case of mobile releases, we recognize that some processes require external validation like Apple’s App Store review that could take days. Still, within the context of processes that a development team can control, the maturity of a team can be determined by its Change Lead Time:

How do you improve Change Lead Time?

The key to improving Change Lead Time is ensuring that the tool you use provides direct, actionable feedback on what is happening and where bottlenecks are occurring. To improve Change Lead Time, teams should look deeper into the individual buckets (stages) where they spend their time. Let’s review ways to optimize the software development process by stage:

Coding

Once a user story or task is understood and the team has a clear definition of “done,” the developer can get to work writing some code. At this point, key optimization techniques are available to the developer.

Does the developer have the right tools and environment to validate that the code is working as expected? For more modern microservice-based architectures, teams can minimize wasted developer time by creating an environment that does not require locally running all services, but only the ones actively being worked on.

Automated testing

Architecture aside, the most fundamental part of writing code is validating that it meets the needs outlined in the user story. This implies the need for robust and reliable testing. In addition, this testing must be automated. A suite of unit tests, integration tests, and end-to-end tests ensures that the functionality developed can be validated at other stages of the development lifecycle.

Automated testing removes the need for manual intervention at the code review or deployment stages, since manual intervention here can create bottlenecks and consequently impact the Change Lead Time. Learn more about automating browser testing.

Code review

Before code is pushed to production, it goes through a review as part of the pull request process. This ensures that the changes made align with the description in the ticket (whether that be in Jira or Asana or some other system). Code review also tries to root out any bugs or instabilities, and is an opportunity to enforce company standards and conventions.

Code review is a stage in which automation can deliver outstanding value:

- Cleaning up code with linters

- Checking for unnecessary complexity or other code hygiene issues

- Executing tests and examining code coverage results

Ephemeral environments

During code review, tools like ephemeral environments can come in handy. If any manual validation is required, spinning up a test environment through a GitHub action or some CI/CD tool can potentially cut hours—or even days—out of the release cycle. Being able to quickly clean up test environments after completing validation is also a big win.

Review lag

One of the sub-stages of Code Review you want to track is Review Lag, which is how long it takes between the time the pull request is opened and reviewed. The lag could make up the largest portion of time being spent in Code Review, and it would be a fairly straightforward one to improve.

Check out these tips on how to improve the pull request and code review experience for both the developer and reviewer.

Deployment

The last bucket of time in Change Lead Time involves deploying that code to the end users. When we covered Deployment Frequency, we included lots of great tips and tricks on how to improve your process for getting code out the door. Hint: it’s all about tools and automation!

Canary release

Though the team needs to have confidence that any code released is free of defects, your process would also benefit from a mechanism to perform canary releases. By simultaneously releasing multiple versions of a system—one with the new code and others without—a team can start small in validating that the updated code is working as expected. As success metrics continue tracking at 100%, the other instances of the system can be switched over to the new version.

Canary releases also guarantee that if there are any issues, then only a small subset of users will be impacted, and the changes can be rolled back easily. Using an approach like this also drives maturity around the Mean Time to Recover (MTTR) metric, ensuring that bugs or issues in production get resolved quickly and easily.

Watch this video for more practical tips on how to reduce Change Lead Time.

The dos and don'ts of Change Lead Time

Do: Automated tracking

One major challenge in tracking Change Lead Time has to do with the data sources: they are stored in various systems. Tickets are typically stored in one system (for example, Jira). Source code is stored in another (such as GitHub or GitLab). Deployment information is stored in yet another system (such as CircleCI or Jenkins). Manually collecting, aggregating, and analyzing data across these different systems on a regular basis can be too time-consuming.

You’ll want to decide whether to build or buy a tracking solution that integrates with the different systems out of the box and accurately captures the data that make up Change Lead Time. Check out this comparison article for several commercial tools to consider.

Don’t: Skimp on accuracy

It’s important not to skip on Change Lead Time accuracy because essentially we’re measuring time allocations and investing our improvement efforts based on such allocations.

Sure, you can infer when a change has been deployed by looking at when the change is merged to main, but that could be quite inaccurate because of activities that take place after the merge, such as CI tests and deployments to the various environments, such as staging.

Don't: Focus on this metric alone

It’s also vital to ensure that teams aren’t “gaming the system”, which is why Change Lead Time should be seen as one DORA metric of many. Misreporting or skipping steps in the stages of the Change Lead Time cycle doesn’t support the team or the company in delivering a quality product. If teams shift the time burden to the user story-writing task before the Change Lead Time cycle, they might improve their Change Lead Time, but the Cycle Time metric will reflect no improvement. Similarly, if a team tries to speed up delivery by skipping testing, the Change Failure Rate metric will catch and address this practice.

Conclusion

Digging deeper into Change Lead Time allows organizations to understand how their engineers spend time on building features, implementing changes, and releasing new code to end users. This investigation can surface opportunities for process improvement, and those improvements often center around leveraging automation.

Change Lead Time also highlights the need for a tool that can aggregate information from various sources to help build a better understanding of where a team’s time goes. Individual optimizations may be helpful, but prioritizing individual metric improvements at the expense of a holistic understanding of the complete system may result in missed opportunities.